Content Moderation

SDK

We provide a PHP SDK, including an integration example, to make it quicker and easier to start moderating your content:

VerifyMyContent Content Moderation PHP SDK

Postman Workspace

We've created a Postman workspace specifically for our API, which contains example API calls that you can use to test and familiarise yourself with our API.

The workspace includes example API calls for each of our API endpoints, along with detailed descriptions of the request parameters and headers being used.

You can generate most of the client code needed to call our APIs with the Postman client code generator. With this feature, developers can choose from a range of programming languages and generate the corresponding code in a few clicks.

Please note that while both the example API calls and the generated code can be a helpful starting point, it's important to thoroughly test your own API calls before deploying them in production. If you encounter any issues while using the example API calls, or if you have any questions about our API, please don't hesitate to reach out to our support team.

API Domain

Our API is designed to be used in two environments: a sandbox environment and a production environment.

The sandbox environment is intended for testing and development purposes, while the production environment is used for live data and real-world use cases.

To ensure the security and integrity of our API, we use separate API keys for each environment. This means that you will need to obtain different API keys for the sandbox and production environments, and should not use the same key for both.

| Domain | Environment | Purpose |
|---|---|---|
| https://moderation.verifymycontent.com | production | Live data and real-world use |
| https://moderation.sandbox.verifymycontent.com | sandbox | Testing and development |
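A minimal Python sketch of keeping the two environments apart. The base URLs come from the table above. The helper names and the `VMC_<ENV>_API_KEY` environment-variable scheme are hypothetical illustrations of "one key per environment", not part of the API.

```python
import os

# Base URLs from the domain table. The helper names below are hypothetical.
BASE_URLS = {
    "production": "https://moderation.verifymycontent.com",
    "sandbox": "https://moderation.sandbox.verifymycontent.com",
}


def endpoint_url(environment: str, path: str) -> str:
    """Join the base URL for `environment` with an API path such as /api/v1/moderation."""
    try:
        base = BASE_URLS[environment]
    except KeyError:
        raise ValueError(f"unknown environment: {environment!r}") from None
    return base + "/" + path.lstrip("/")


def api_key_for(environment: str) -> str:
    """Read the key for one environment (VMC_<ENV>_API_KEY is an illustrative naming scheme)."""
    # One key per environment: a sandbox key is never sent to production and vice versa.
    return os.environ[f"VMC_{environment.upper()}_API_KEY"]
```

For example, `endpoint_url("sandbox", "/api/v1/moderation")` yields the sandbox moderation endpoint, and switching to `"production"` changes only the base URL and the key you load.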

Generating the HMAC header

To improve the security of the communication between your implementation and the VerifyMyContent API, we require you to generate a unique, hexadecimal-encoded HMAC-SHA256 hash for each request, based on its input: the request body or the request URI, as specified for each endpoint below.

How you generate it depends on the language of your implementation.

If you use our SDK, the HMAC step is abstracted away and you don't need to do anything related to it.

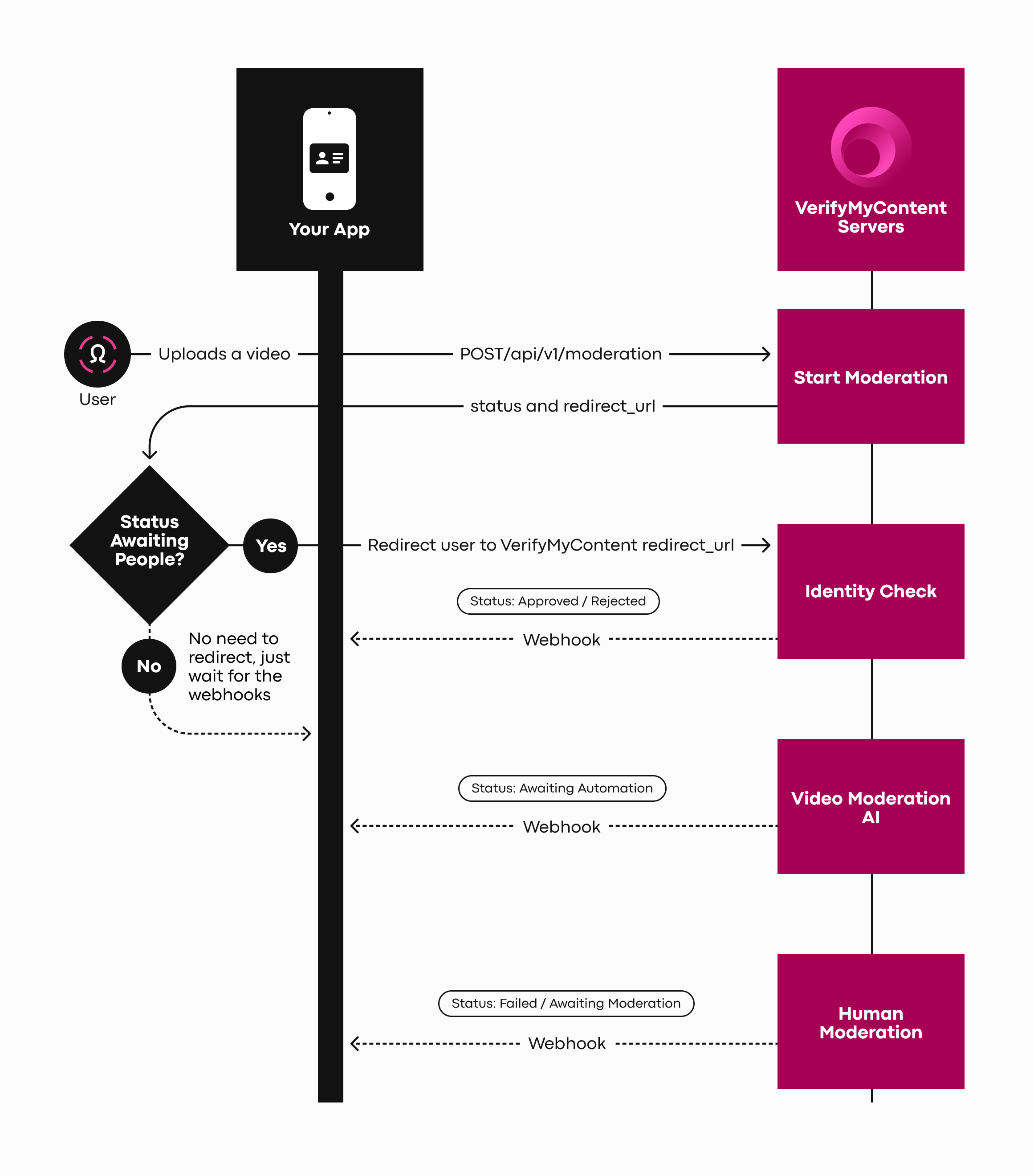
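For integrations that do not use the SDK, here is a minimal Python sketch of the HMAC step. It assumes that the HMAC key is an API secret issued alongside your API key (this page does not name the key material), and that the message is the exact request body for POST requests or the request URI for GET requests. The header format `hmac YOUR-API-KEY:GENERATED-HMAC` is taken from the endpoint descriptions; the function name `hmac_authorization` is hypothetical.

```python
import hashlib
import hmac


def hmac_authorization(api_key: str, api_secret: str, message: str) -> str:
    """Build an Authorization header value of the form 'hmac KEY:HEX-DIGEST'.

    Assumptions: the HMAC key is your API secret, and `message` is the raw
    request body (POST) or the request URI (GET), byte-for-byte as sent.
    """
    digest = hmac.new(
        api_secret.encode("utf-8"),
        message.encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()  # lowercase hexadecimal SHA-256 HMAC
    return f"hmac {api_key}:{digest}"


body = '{"content":{"external_id":"YOUR-VIDEO-ID"}}'
print(hmac_authorization("YOUR-API-KEY", "YOUR-API-SECRET", body))
```

Sign the exact string you send. Re-serializing JSON after signing (different key order or whitespace) produces a different digest.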

Integration Steps

VerifyMyContent content moderation consists of two basic steps:

- Verifying the uploader
- Moderating the content itself

When you call the Start Moderation endpoint, it takes care of both steps, and you will receive moderation status updates at the URL you set as the webhook.

Alternatively, if you prefer not to use a webhook, you can retrieve the status at any time from the Get current status of the moderation endpoint.

The meaning of each status

When you start the moderation, the content will have the status Awaiting People, which means that we need to verify the identity of the uploader and confirm they have received the consent of any participants appearing in the content.

If face analysis is enabled, the status changes to Face Analysis after the uploader has been verified: our AI checks whether anyone other than the uploader appears in the content. If it finds someone who has not given the uploader their consent, the status changes to Awaiting Participant, which means the uploader must now add the other participants. Once the participants have been added, the status changes either to Awaiting Consent, while we wait for the added participants to give their consent, or to Awaiting Automation.

As soon as the uploader is verified and they confirm they have received the consent of any participants, the content status will change to Awaiting Automation, which will trigger our AI to moderate the content and check that it does not contain any content that should not be published. If we cannot download the video or image you sent, the content will finish the process with the status Failed.

After the AI check, the status changes to Awaiting Moderation and human moderation begins.

Once the content has passed human moderation the status will change to either Approved if the content can be published or Rejected if it cannot be published.
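The status strings described above can be grouped by who the process is waiting on. This Python sketch is our own reading of the prose, not an official state machine: the `Stage` names and the grouping are assumptions, and only the status strings themselves come from this page.

```python
from enum import Enum


class Stage(Enum):
    # Hypothetical grouping of the documented statuses.
    UPLOADER = "waiting on the uploader"
    PARTICIPANTS = "waiting on participants"
    AUTOMATED = "automated (AI) processing"
    HUMAN = "human moderation"
    FINAL = "final"


# Status strings as documented; the stage assigned to each is our interpretation.
STATUS_STAGE = {
    "Awaiting People": Stage.UPLOADER,
    "Face Analysis": Stage.AUTOMATED,
    "Awaiting Participant": Stage.UPLOADER,
    "Awaiting Consent": Stage.PARTICIPANTS,
    "Awaiting Automation": Stage.AUTOMATED,
    "Awaiting Moderation": Stage.HUMAN,
    "Approved": Stage.FINAL,
    "Rejected": Stage.FINAL,
    "Failed": Stage.FINAL,
}


def stage_of(status: str) -> Stage:
    """Return the stage for a documented status; reject anything undocumented."""
    try:
        return STATUS_STAGE[status]
    except KeyError:
        raise ValueError(f"undocumented status: {status!r}") from None


def is_final(status: str) -> bool:
    """Approved, Rejected and Failed end the process."""
    return stage_of(status) is Stage.FINAL
```

A stage of `Stage.UPLOADER` is a natural point to send the uploader to the `redirect_url` returned by the API.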

Start Moderation

To start the moderation, you'll need to send us the original video or image uploaded by your customer, some data from your customer so we can trigger reminders and notifications during the process, and a webhook to notify you when the moderation status changes.

Important: Please generate a universally unique identifier (UUID v4) for each customer on your site. You should then use this same customer ID every time you call one of our APIs on behalf of that customer. This UUID should never change. For example, if the customer's username on your site changes, their UUID shouldn't.
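A minimal Python sketch of a stable per-customer UUID v4. The in-memory `CustomerIdStore` is hypothetical; in practice you would persist the value alongside the customer record. It is keyed on an internal, immutable user key rather than on the username, so renaming a user does not change the ID.

```python
import uuid


class CustomerIdStore:
    """Hypothetical in-memory store; persist the ID with the customer record in practice."""

    def __init__(self) -> None:
        self._ids: dict[str, str] = {}

    def customer_id(self, internal_user_key: str) -> str:
        # Generate a UUID v4 the first time, then always return the same value.
        if internal_user_key not in self._ids:
            self._ids[internal_user_key] = str(uuid.uuid4())
        return self._ids[internal_user_key]


store = CustomerIdStore()
first = store.customer_id("user-42")
assert store.customer_id("user-42") == first  # unchanged on every later call
```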

Authorization Header

Generate HMAC with: Request body

Authorization: hmac YOUR-API-KEY:GENERATED-HMAC

Request parameters

content required

Information about the content being moderated.

content.type optional

Either video or image. Defaults to video.

content.external_id required

A unique identifier on your side to correlate with this moderation.

content.url required

The URL where we can download the original video or image uploaded by your customer.

content.title optional

The title of the content being moderated.

content.description optional

The text written by your customer to describe the content.

redirect_url optional

The URL we will redirect your customer to after the moderation is done.

webhook required

The URL where we will post status updates for the moderation.

customer required

Customer information.

customer.id required

Customer's unique ID (the UUID v4 described above).

customer.email required

Customer's email address.

customer.phone optional

Customer's phone number.

type optional

The type of moderation to perform. It can be one of the following values and defaults to moderation.

| Type | Description |
|---|---|
| moderation | We will look for any illegal or harmful content. This is also the default option when you don't send any value. |
| face-match | We will look to see if the expected people are present in the content. |
| combined | We will look for any illegal or harmful content and, via face-match, check whether the expected people are present in the content. |

rule optional

It can be one of the following values:

| Rule | Description |
|---|---|
| default | Looks for content that is not allowed in the context of adult websites. This is also the default option when you don't send any value. |
| no-nudity | Looks for content that is not allowed in the context of adult websites and also looks for nudity. |
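The nesting of these parameters (`content.*` and `customer.*` inside the body) is easiest to see in code. This Python sketch checks the fields marked required, serializes the body once and signs those exact bytes, then returns an unsent `urllib.request.Request`. Two assumptions apply: the HMAC key is your API secret, and the function name is hypothetical. The placeholder values mirror the request example on this page.

```python
import hashlib
import hmac
import json
import urllib.request

# Fields this page marks as required, nested under "content" and "customer".
REQUIRED_CONTENT_FIELDS = ("external_id", "url")
REQUIRED_CUSTOMER_FIELDS = ("id", "email")


def build_start_moderation_request(
    base_url: str, api_key: str, api_secret: str, payload: dict
) -> urllib.request.Request:
    """Validate the payload and return a signed POST request (not yet sent)."""
    content = payload.get("content") or {}
    customer = payload.get("customer") or {}
    for field in REQUIRED_CONTENT_FIELDS:
        if not content.get(field):
            raise ValueError(f"content.{field} is required")
    for field in REQUIRED_CUSTOMER_FIELDS:
        if not customer.get(field):
            raise ValueError(f"customer.{field} is required")
    if not payload.get("webhook"):
        raise ValueError("webhook is required")

    # Serialize once and sign exactly the bytes that will be sent.
    # Assumption: the HMAC key is your API secret.
    body = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    digest = hmac.new(api_secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/v1/moderation",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"hmac {api_key}:{digest}",
        },
        method="POST",
    )


payload = {
    "content": {
        "type": "video",
        "external_id": "YOUR-VIDEO-ID",
        "url": "https://example.com/video.mp4",
    },
    "webhook": "https://example.com/webhook",
    "customer": {"id": "YOUR-USER-ID", "email": "customer@example.com"},
    "type": "moderation",
    "rule": "default",
}
request = build_start_moderation_request(
    "https://moderation.sandbox.verifymycontent.com", "YOUR-API-KEY", "YOUR-API-SECRET", payload
)
# urllib.request.urlopen(request) would send it.
```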

Response parameters

id

Unique identifier generated by the VerifyMyContent API.

redirect_url

URL you'll need to redirect the user to in order to start the moderation process.

NOTE: If face analysis is enabled and the status is Awaiting Participant, this is the URL to which the uploader should be redirected to add participants.

external_id

The unique identifier you sent to correlate this moderation.

status

It represents the current status of the moderation flow. It can be one of the following:

| Status | Description |
|---|---|
| Awaiting People | Waiting to verify the identity of the uploader. |
| Face Analysis | Our AI is about to process the content to detect any unknown person and request the uploader for participant information. |
| Awaiting Participant | Waiting for the uploader to confirm the participants and provide the necessary information. |
| Awaiting Consent | Waiting to verify the identity of the participants and confirm they have given consent to the content being published. |
| Awaiting Automation | Our AI is about to process the content to detect any illegal or non-consensual content. |
| Awaiting Moderation | The moderators are about to confirm the moderation decision. |
| Approved | All participants consented to the publishing of the content. Please check the warning section to see if any unexpected people were detected in the content. |
| Failed | An error occurred during the content processing. |
| Rejected | The content cannot be published as one or more of the participants in the content were unable or unwilling to verify their identity or consent. |

notes

Any notes associated with the moderation when its status is Failed.

tags

Any tags associated with the moderation when its status is Rejected.

tags_with_sub

Additional tags with a breakdown of the reason for rejection when the moderation status is Rejected.
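The shape of `tags_with_sub` is only shown by example (the webhook example on this page contains `{"tag": "UNDERAGE", "sub_tag": "CSAM"}`). This Python sketch assumes that shape and groups sub-tags under their tag. Tags in `tags` that have no breakdown are kept with an empty list. The function name is hypothetical.

```python
def rejection_reasons(moderation: dict) -> dict[str, list[str]]:
    """Group sub-tags under their tag, e.g. {"UNDERAGE": ["CSAM"]}.

    Assumes tags_with_sub items look like {"tag": ..., "sub_tag": ...};
    "tags" and "tags_with_sub" may be null or empty.
    """
    reasons: dict[str, list[str]] = {}
    for tag in moderation.get("tags") or []:
        reasons.setdefault(tag, [])
    for item in moderation.get("tags_with_sub") or []:
        reasons.setdefault(item["tag"], []).append(item["sub_tag"])
    return reasons
```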

created_at

The date and time (UTC) when the moderation was created.

updated_at

The date and time (UTC) when the moderation was last updated.
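The examples on this page show `created_at` and `updated_at` as `YYYY-MM-DD HH:MM:SS` with no offset, while the field descriptions state that they are UTC. This Python sketch, assuming that format holds, parses them into timezone-aware datetimes so they compare correctly with your own timestamps. The helper name is hypothetical.

```python
from datetime import datetime, timezone


def parse_vmc_timestamp(value: str) -> datetime:
    """Parse 'YYYY-MM-DD HH:MM:SS' (format taken from the examples) as a UTC-aware datetime."""
    return datetime.strptime(value, "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc)


created = parse_vmc_timestamp("2020-11-12 19:06:00")
print(created.isoformat())  # 2020-11-12T19:06:00+00:00
```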

Error responses

| Code | Description |
|---|---|
| 400 | Bad Request |
| 422 | Unprocessable Entity |
| 401 | Unauthorized |
| 409 | Conflict |
| 500 | Internal Server Error |

```http
POST /api/v1/moderation HTTP/1.1
Content-Type: application/json
Authorization: hmac YOUR-API-KEY:GENERATE-HMAC-WITH-REQUEST-BODY

{
  "content": {
    "type": "video",
    "external_id": "YOUR-VIDEO-ID",
    "url": "https://example.com/video.mp4",
    "title": "Your title",
    "description": "Your description"
  },
  "webhook": "https://example.com/webhook",
  "redirect_url": "https://example.com/redirect",
  "customer": {
    "id": "YOUR-USER-ID",
    "email": "customer@example.com",
    "phone": "+4412345678"
  },
  "type": "moderation",
  "rule": "default"
}
```

```json
{
  "id": "ABC-123-5678-ABC",
  "redirect_url": "https://app.verifymycontent.com/v/ABC-123-5678-ABC",
  "external_id": "YOUR-CORRELATION-ID",
  "status": "Awaiting Automation",
  "notes": "Harmful content found.",
  "tags": null,
  "tags_with_sub": [],
  "created_at": "2020-11-12 19:06:00",
  "updated_at": "2020-11-12 19:06:00",
  "consent_type": "simple"
}
```

Get current status of the moderation

If you already have the id of a moderation, you can retrieve its current details.

Authorization Header

Generate HMAC with: Request URI

Authorization: hmac YOUR-API-KEY:GENERATED-HMAC
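For this endpoint the HMAC is computed over the request URI instead of a body. This Python sketch assumes that "request URI" means the path `/api/v1/moderation/{ID}` and that the HMAC key is your API secret. Confirm with the SDK or our support team whether your integration must sign the full URL instead. The function name is hypothetical, and the request is built but not sent.

```python
import hashlib
import hmac
import urllib.parse
import urllib.request


def build_get_moderation_request(
    base_url: str, api_key: str, api_secret: str, moderation_id: str
) -> urllib.request.Request:
    """Return a signed GET request for one moderation (not yet sent)."""
    # Quote the ID so it is safe to place in the path.
    path = "/api/v1/moderation/" + urllib.parse.quote(moderation_id, safe="")
    # Assumption: the signed "request URI" is the path, keyed with the API secret.
    digest = hmac.new(api_secret.encode("utf-8"), path.encode("utf-8"), hashlib.sha256).hexdigest()
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        headers={"Authorization": f"hmac {api_key}:{digest}"},
        method="GET",
    )
```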

Response parameters

id

Unique identifier generated by the VerifyMyContent API.

redirect_url

URL you'll need to redirect the user to in order to start the moderation process.

NOTE: If face analysis is enabled and the status is Awaiting Participant, this is the URL to which the uploader should be redirected to add participants.

external_id

The unique identifier you sent to correlate this moderation.

status

It represents the current status of the moderation flow. It can be one of the following:

| Status | Description |
|---|---|
| Awaiting People | Waiting to verify the identity of the uploader. |
| Face Analysis | Our AI is about to process the content to detect any unknown person and request the uploader for participant information. |
| Awaiting Participant | Waiting for the uploader to confirm the participants and provide the necessary information. |
| Awaiting Consent | Waiting to verify the identity of the participants and confirm they have given consent to the content being published. |
| Awaiting Automation | Our AI is about to process the content to detect any illegal or non-consensual content. |
| Awaiting Moderation | The moderators are about to confirm the moderation decision. |
| Approved | All participants consented to the publishing of the content. Please check the warning section to see if any unexpected people were detected in the content. |
| Failed | An error occurred during the content processing. |
| Rejected | The content cannot be published as one or more of the participants in the content were unable or unwilling to verify their identity or consent. |

notes

Any notes associated with the moderation when its status is Failed.

tags

Any tags associated with the moderation when its status is Rejected.

facematch

The results of the face-match moderation. It contains the following fields:

| Field | Description |
|---|---|
| known | An array of face_ids of the expected faces seen in the content. |
| participant | An array of objects containing the face_id, bounding box and time at which the participant's face was seen in the content. |
| unknown | An array of objects containing the bounding box and time at which the unexpected face was seen in the content. |
| risky | An array of objects containing the face_id, bounding box and time at which the risky user's face was seen in the content. |
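A common use of `facematch` is to flag content where someone unexpected or risky was seen. This Python sketch turns non-empty `unknown` and `risky` arrays into readable warnings. Treating those two arrays as warnings is our own policy assumption rather than documented behaviour, and the function name is hypothetical.

```python
from typing import Optional


def facematch_warnings(facematch: Optional[dict]) -> list[str]:
    """Return readable warnings for unexpected or risky faces (a policy sketch)."""
    facematch = facematch or {}
    warnings = []
    unknown = facematch.get("unknown") or []
    risky = facematch.get("risky") or []
    if unknown:
        warnings.append(f"{len(unknown)} unexpected face(s) detected")
    if risky:
        warnings.append(f"{len(risky)} risky user face(s) detected")
    return warnings
```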

created_at

The date and time (UTC) when the moderation was created.

updated_at

The date and time (UTC) when the moderation was last updated.
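If you poll this endpoint instead of using a webhook, a loop like this Python sketch keeps checking until the status is Approved, Rejected or Failed. The `fetch` callable is a hypothetical stand-in for whatever performs the signed GET and decodes the JSON. The 60-second interval and the attempt limit are arbitrary placeholders, not documented rate limits. Because uploader verification, consent and human moderation can take a while, a webhook is usually the better fit.

```python
import time
from typing import Callable

FINAL_STATUSES = {"Approved", "Rejected", "Failed"}


def wait_for_final_status(
    fetch: Callable[[str], dict],
    moderation_id: str,
    interval: float = 60.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call `fetch` until the moderation reaches a final status, then return it."""
    for attempt in range(max_attempts):
        moderation = fetch(moderation_id)
        if moderation.get("status") in FINAL_STATUSES:
            return moderation
        if attempt < max_attempts - 1:
            sleep(interval)  # wait before the next check
    raise TimeoutError(f"moderation {moderation_id} not final after {max_attempts} checks")
```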

Error responses

| Code | Description |
|---|---|
| 401 | Unauthorized |
| 403 | Forbidden |
| 404 | Not Found |
| 500 | Internal Server Error |

```http
GET /api/v1/moderation/{ID} HTTP/1.1
Content-Type: application/json
Authorization: hmac YOUR-API-KEY:GENERATE-HMAC-WITH-REQUEST-URI
```

```json
{
  "id": "ABC-123-5678-ABC",
  "redirect_url": "https://app.verifymycontent.com/v/ABC-123-5678-ABC",
  "external_id": "YOUR-CORRELATION-ID",
  "status": "Awaiting Automation",
  "notes": "Harmful content found.",
  "tags": null,
  "created_at": "2020-11-12 19:06:00",
  "updated_at": "2020-11-12 19:06:00",
  "consent_type": "simple",
  "facematch": {
    "known": [],
    "participant": [],
    "unknown": [],
    "not_a_face": [],
    "risky": []
  }
}
```

Receive the current status of the moderation

The same information returned by the moderation detail endpoint is also posted to the webhook URL you provide when you create a moderation.

Authorization Header

Generate HMAC with: Request body

Authorization: hmac YOUR-API-KEY:GENERATED-HMAC

Request parameters

id

Unique identifier generated by the VerifyMyContent API.

redirect_url

URL you'll need to redirect the user to in order to start the moderation process.

NOTE: If face analysis is enabled and the status is Awaiting Participant, this is the URL to which the uploader should be redirected to add participants.

external_id

The unique identifier you sent to correlate this moderation.

status

It represents the current status of the moderation flow. It can be one of the following:

| Status | Description |
|---|---|
| Awaiting People | Waiting to verify the identity of the uploader. |
| Face Analysis | Our AI is about to process the content to detect any unknown person and request the uploader for participant information. |
| Awaiting Participant | Waiting for the uploader to confirm the participants and provide the necessary information. |
| Awaiting Consent | Waiting to verify the identity of the participants and confirm they have given consent to the content being published. |
| Awaiting Automation | Our AI is about to process the content to detect any illegal or non-consensual content. |
| Awaiting Moderation | The moderators are about to confirm the moderation decision. |
| Approved | All participants consented to the publishing of the content. Please check the warning section to see if any unexpected people were detected in the content. |
| Failed | An error occurred during the content processing. |
| Rejected | The content cannot be published as one or more of the participants in the content were unable or unwilling to verify their identity or consent. |

notes

Any notes associated with the moderation when its status is Failed.

tags

Any tags associated with the moderation when its status is Rejected.

facematch

The results of the face-match moderation. It contains the following fields:

| Field | Description |
|---|---|
| known | An array of face_ids of the expected faces seen in the content. |
| participant | An array of objects containing the face_id, bounding box and time at which the participant's face was seen in the content. |
| unknown | An array of objects containing the bounding box and time at which the unexpected face was seen in the content. |
| risky | An array of objects containing the face_id, bounding box and time at which the risky user's face was seen in the content. |

created_at

The date and time (UTC) when the moderation was created.

updated_at

The date and time (UTC) when the moderation was last updated.

```http
POST /your-path HTTP/1.1
Host: your-domain.com
Content-Type: application/json
Authorization: hmac YOUR-API-KEY:GENERATE-HMAC-WITH-REQUEST-BODY

{
  "id": "ABC-123-5678-ABC",
  "redirect_url": "https://app.verifymycontent.com/v/ABC-123-5678-ABC",
  "external_id": "YOUR-CORRELATION-ID",
  "status": "Rejected",
  "notes": "Harmful content found.",
  "tags": [
    "UNDERAGE"
  ],
  "tags_with_sub": [{
    "tag": "UNDERAGE",
    "sub_tag": "CSAM"
  }],
  "facematch": {
    "known": [],
    "participant": [],
    "unknown": [],
    "not_a_face": [],
    "risky": []
  },
  "created_at": "2020-11-12 19:06:00",
  "updated_at": "2020-11-12 19:06:00",
  "consent_type": "simple"
}
```
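On your side, the webhook handler should check the `Authorization` header before trusting the payload. This Python sketch assumes the webhook is signed the same way as your own requests: an HMAC-SHA256 of the raw body keyed with your API secret, sent as `hmac YOUR-API-KEY:DIGEST`. Confirm this with our support team before relying on it. The function name is hypothetical. It compares in constant time with `hmac.compare_digest` and only parses the JSON once the signature matches.

```python
import hashlib
import hmac
import json


def verify_webhook(raw_body: bytes, authorization: str, api_key: str, api_secret: str) -> dict:
    """Check an 'hmac KEY:DIGEST' header against the raw body and return the parsed payload.

    Assumption: the digest is HMAC-SHA256(api_secret, raw_body), hex-encoded.
    """
    scheme, _, credentials = authorization.partition(" ")
    received_key, _, received_digest = credentials.partition(":")
    if scheme.lower() != "hmac" or not hmac.compare_digest(received_key.encode(), api_key.encode()):
        raise PermissionError("unexpected Authorization header")
    # Sign the body exactly as received; do not re-serialize it first.
    expected = hmac.new(api_secret.encode("utf-8"), raw_body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(received_digest.lower().encode(), expected.encode()):
        raise PermissionError("HMAC mismatch")
    return json.loads(raw_body)
```

After verification, `payload["status"]` can drive your publishing decision, and `external_id` links the update back to your own content record.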